Lecture 1

Welcome!

Agenda

10:10 Introductions

10:30 Course Rhythm/Roadmap

10:45 Syllabus

11:00 R Setup

11:15 Activity

11:45 Introduction to Time Series Models

Extra time: Get started on Exercises

Introductions

Arrange yourselves into groups of 3-4 and share the following:

Name

Major

Whether you spend more time thinking about the past or the future

Whether you are more certain when you think about the past or the future

Please be prepared to share summary data with the class!

About Me (Professional)

Cal Poly SLO B.S. in Statistics and Pure Math

PhD (and MA) in Statistics from Rice University

Expertise: Spatial/Spatiotemporal Statistics (specifically spatial weight matrices)

Also did graduate certificate in teaching and learning

1.5 years authoring online interactive course material for zyBooks/Wiley (EdTech portion Higher Education industry)

1.5 years managing a team of Statistics authors at zyBooks/Wiley

1.5 years as Research Scientist (wastewater epidemiology) at Rice University

Teaching Philosophy

Minimize yapping

Promote collaboration

Provide varied opportunities for feedback

Course Rhythm

Assignments due Monday nights at Midnight (11:59:59 PM)

New assignments posted Mondays at 5pm

Quizzes due Thursday nights at midnight

One Midterm on Wednesday October 23, in class

One cumulative final exam

Section 1 (10am): Wednesday, December 11 from 10am-1pm

Section 2 (2pm): Friday, December 13 from 1pm-4pm

Syllabus

Software Setup

Installing R

Select your operating system

Follow the download instructions

Installing RStudio

Step 2 should have a clickable link with your operating system (auto-detected)

Follow the download instructions

Getting Started

In R Studio, Click File –> New –> Quarto Document

Title it Lecture 1 Notes

Delete the template material

Add setup chunk

Activity

Group time!

Split into groups of 4, I will come around and assign an example to you

In your quarto document, create a heading with a title of your example and “Equations” and “Visualizatons”

Read over the description of the example (access the book through Canvas)

install and load theastsapackage.Copy the R code from https://github.com/nickpoison/tsda/blob/main/Rcode.md#chapter-1

Run the R code and verify whether you can reproduce the figure from the text

Write down a research question that could be answered using the time series data for your example

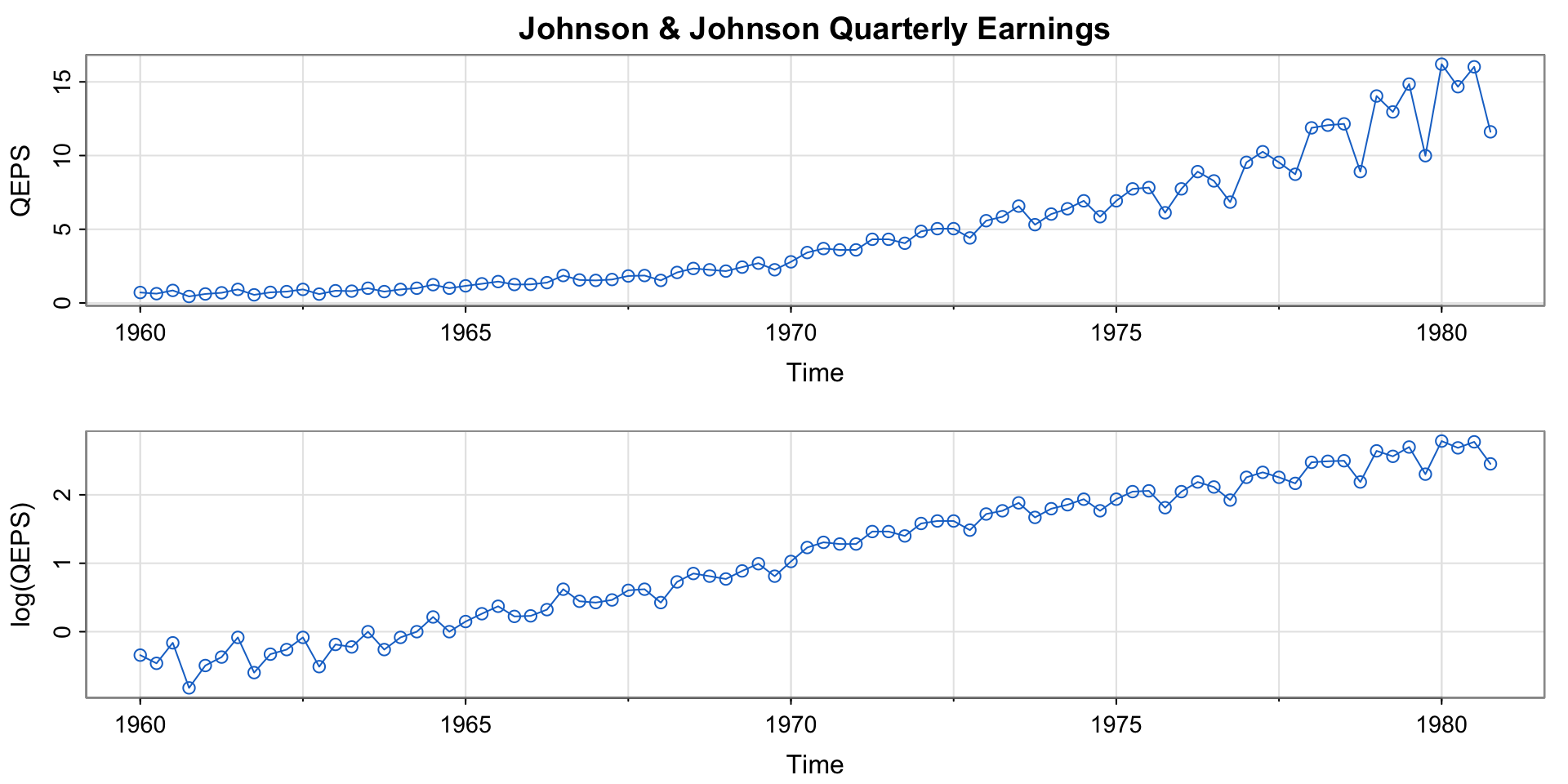

Example 1.1 (Quarterly Earnings)

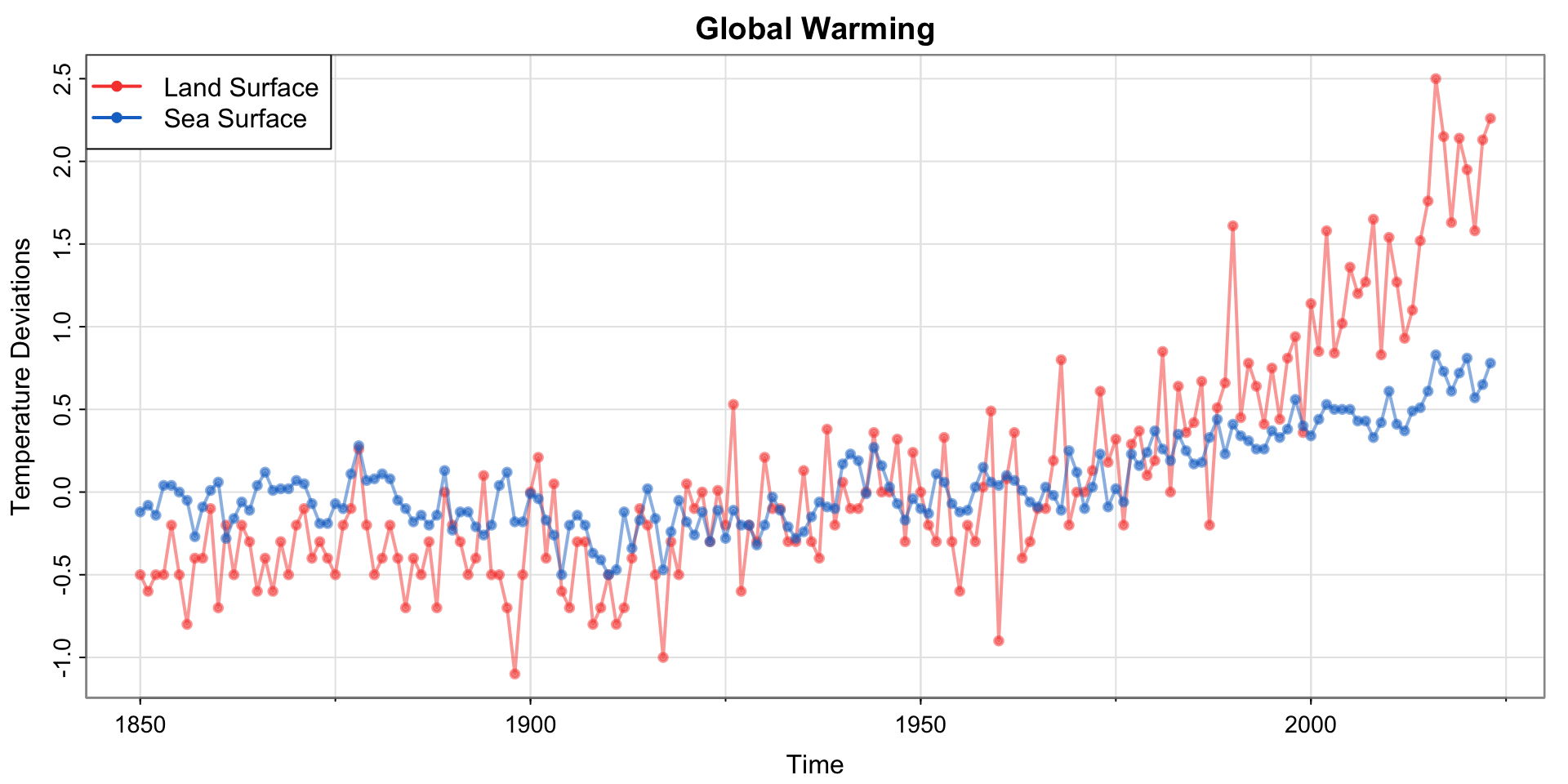

Example 1.2 (Climate Change)

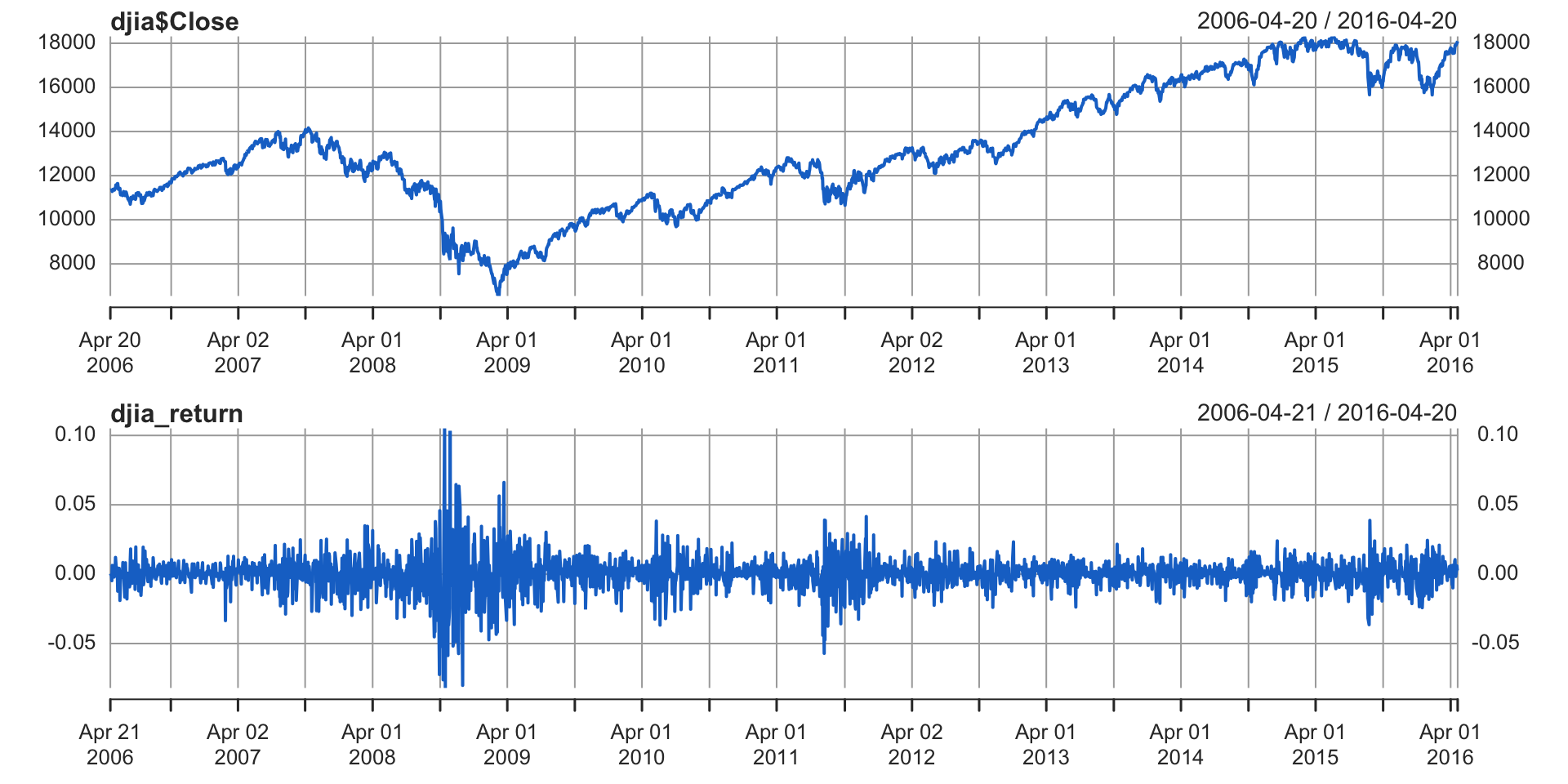

Example 1.3 (Dow Jones Industrial Average)

Code

Code

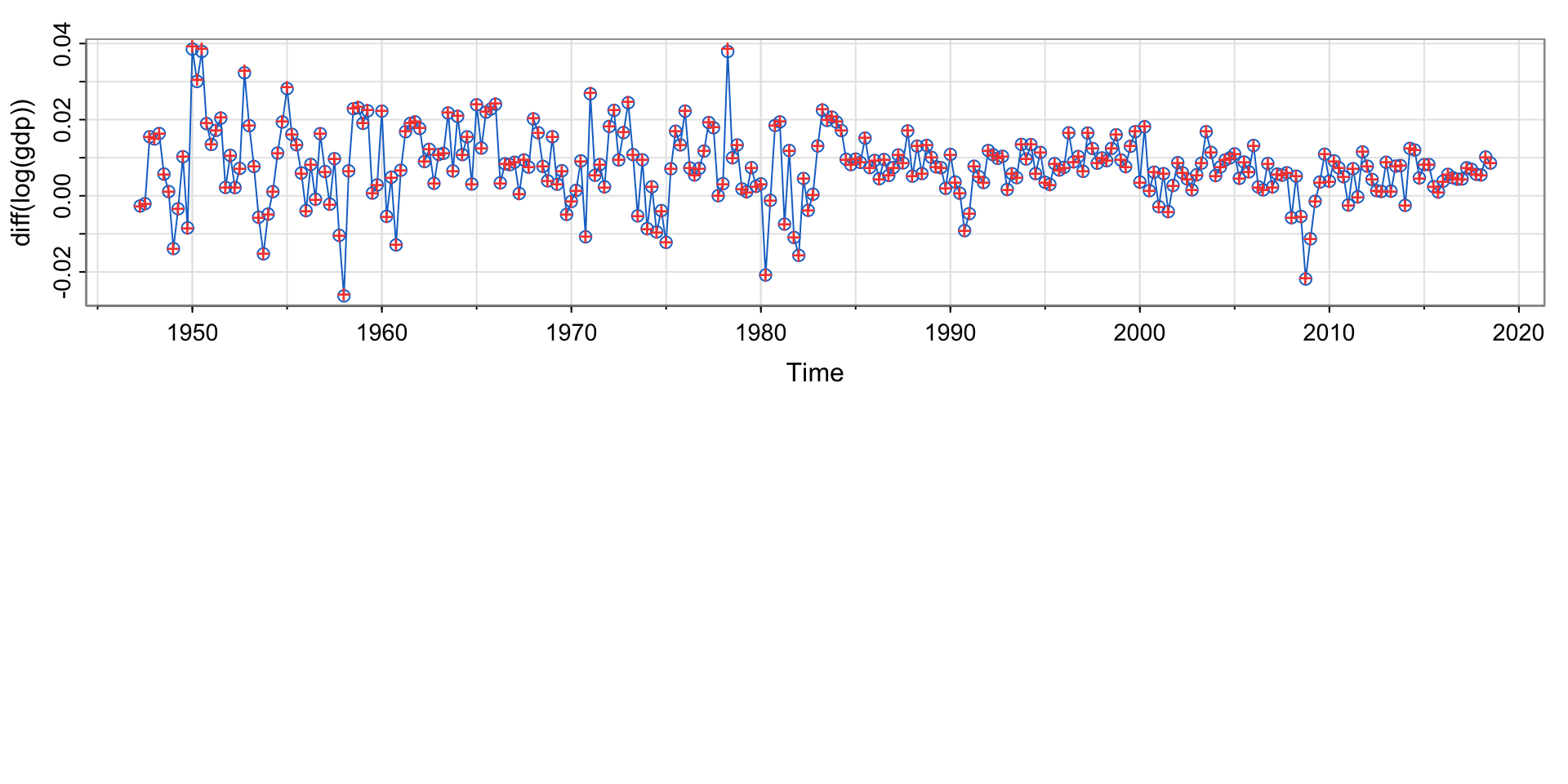

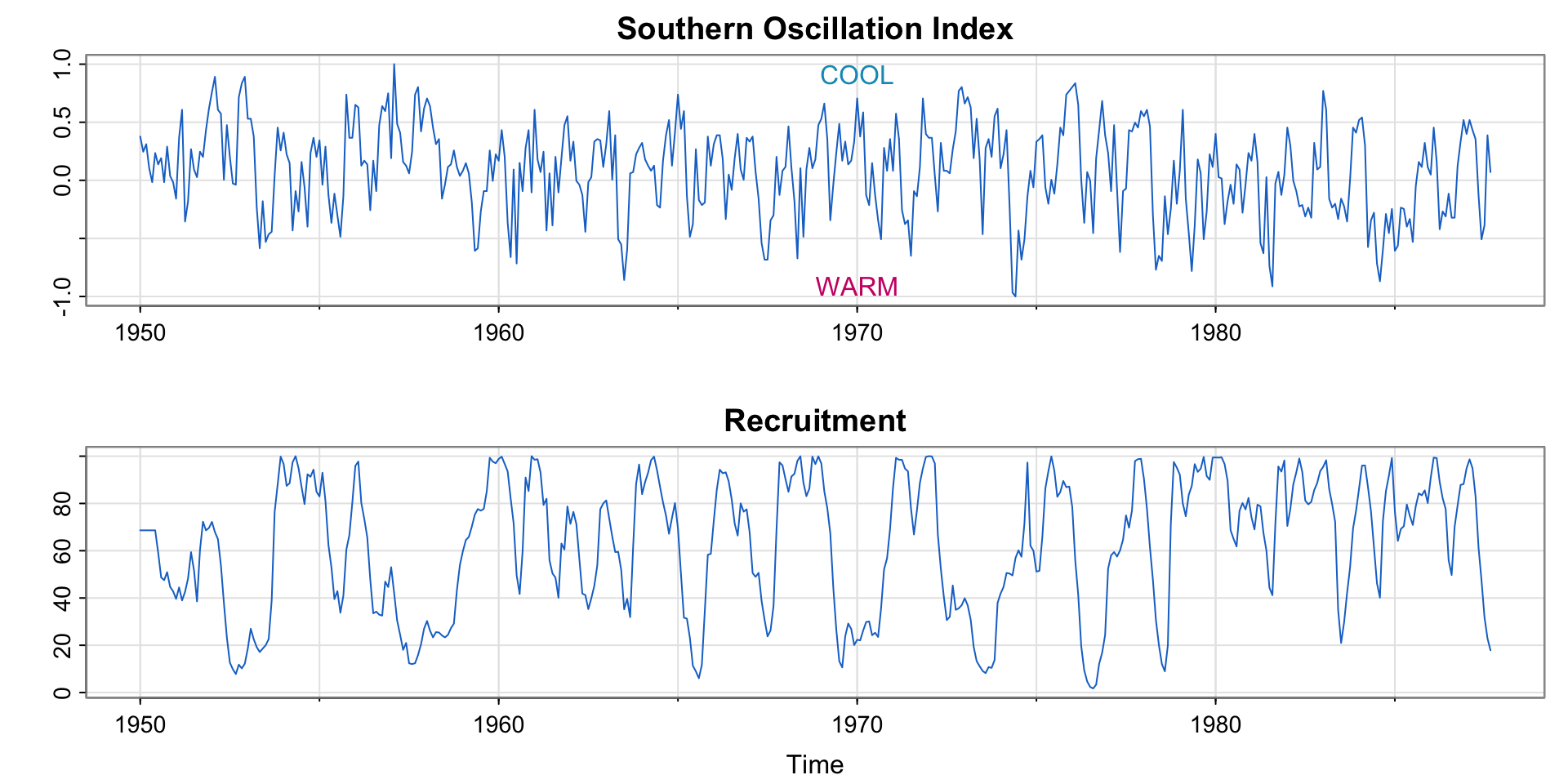

Example 1.4 El Niño

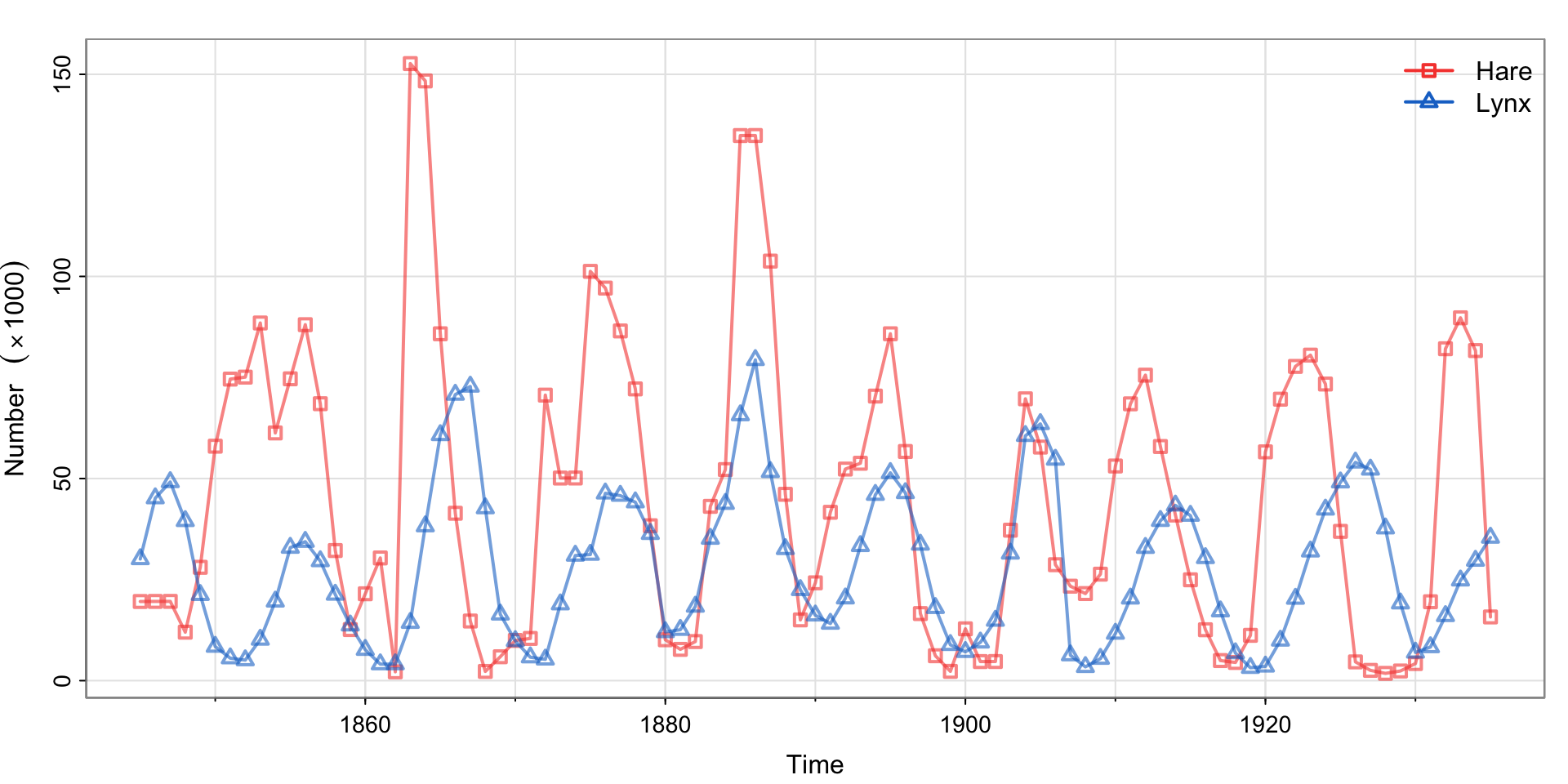

Example 1.5 (Predator-Prey Interactions)

Cute animal pictures

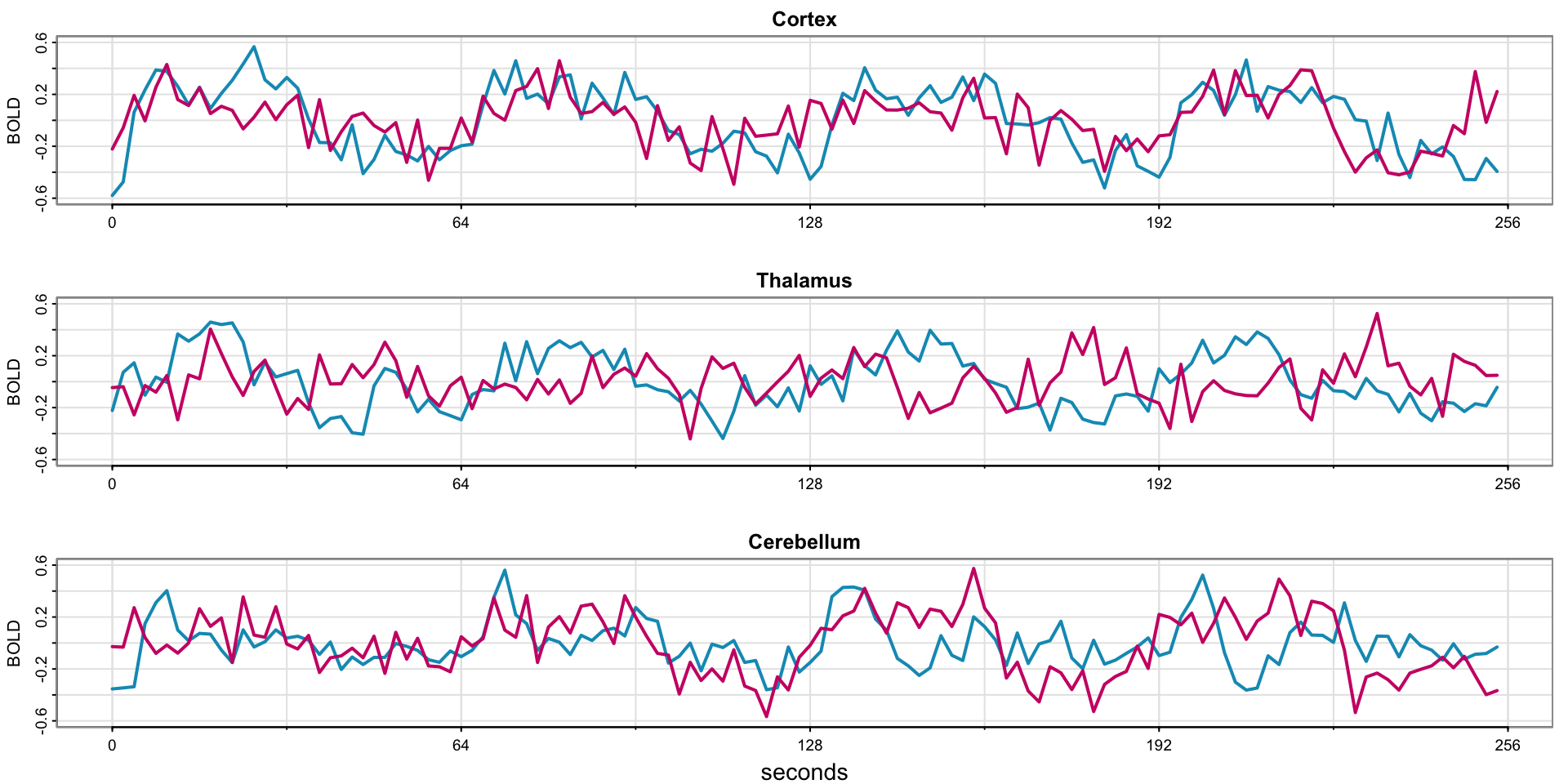

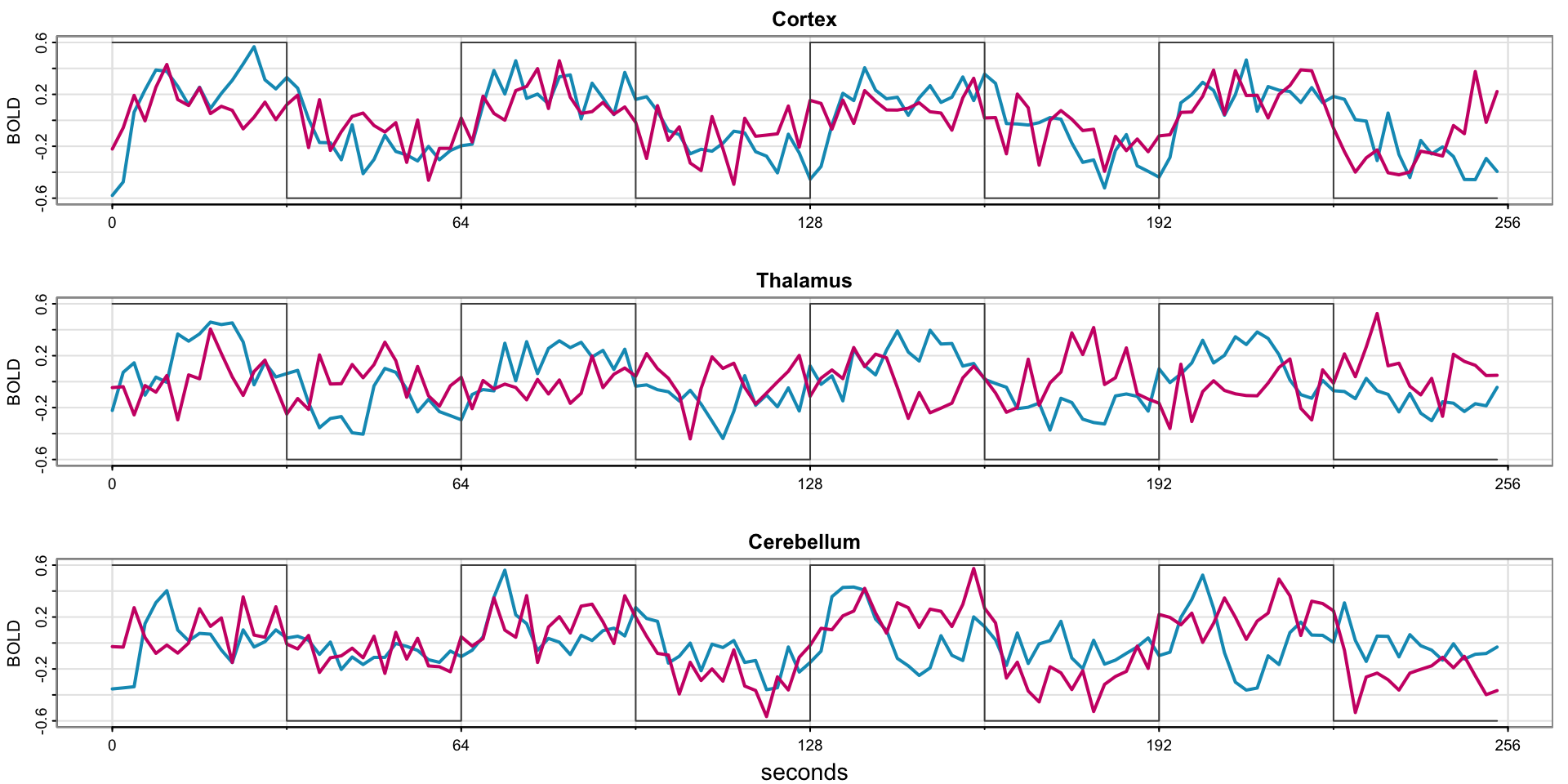

Example 1.6 fMRI Imaging

Code

par(mfrow=c(3,1))

x = ts(fmri1[,4:9], start=0, freq=32) # data

names = c("Cortex","Thalamus","Cerebellum")

u = ts(rep(c(rep(.6,16), rep(-.6,16)), 4), start=0, freq=32) # stimulus signal

for (i in 1:3){

j = 2*i-1

tsplot(x[,j:(j+1)], ylab="BOLD", xlab="", main=names[i], col=5:6, ylim=c(-.6,.6),

lwd=2, xaxt="n", spaghetti=TRUE)

axis(seq(0,256,64), side=1, at=0:4)

#lines(u, type="s", col=gray(.3))

}

mtext("seconds", side=1, line=1.75, cex=.9)

Code

par(mfrow=c(3,1))

x = ts(fmri1[,4:9], start=0, freq=32) # data

names = c("Cortex","Thalamus","Cerebellum")

u = ts(rep(c(rep(.6,16), rep(-.6,16)), 4), start=0, freq=32) # stimulus signal

for (i in 1:3){

j = 2*i-1

tsplot(x[,j:(j+1)], ylab="BOLD", xlab="", main=names[i], col=5:6, ylim=c(-.6,.6),

lwd=2, xaxt="n", spaghetti=TRUE)

axis(seq(0,256,64), side=1, at=0:4)

lines(u, type="s", col=gray(.3))

}

mtext("seconds", side=1, line=1.75, cex=.9)

Introduction to Time Series Models

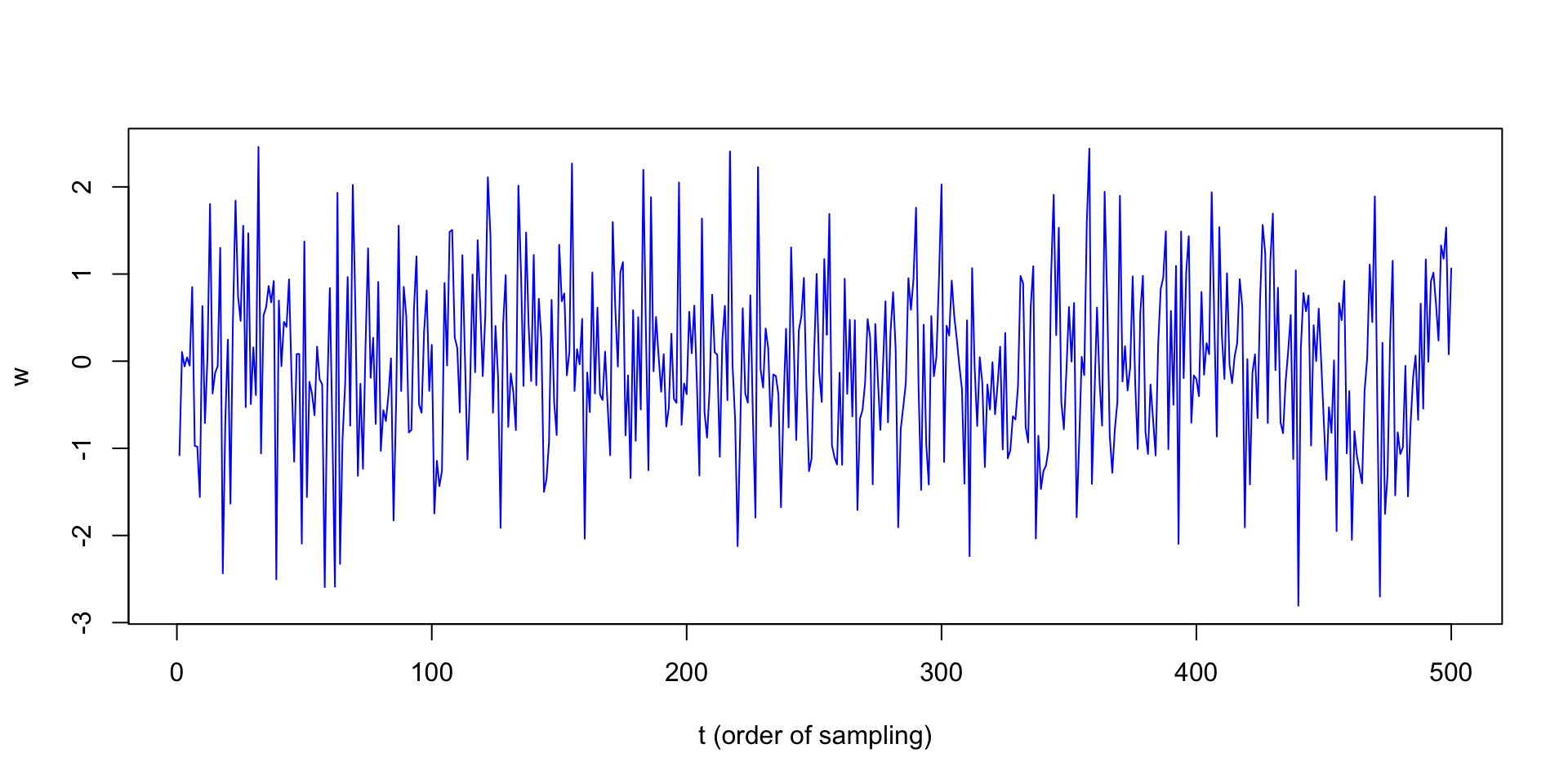

White Noise

in general, a collection of random variables \(w_t\)

uncorrelated

mean 0, variance \(\sigma_w^2\)

denoted \(w_t \sim wn(0, \sigma_w^2)\)

for us, usually independent and identically distributed (i.i.d.) normal

- \(w_t \sim \text{iid } N(0, \sigma_w^2)\)

Plotting White Noise

Which example does this bear the most resemblance to?

What White Noise isn’t

serially correlated – no temporal structure

smooth – “nice” trend/temporal structure

How can we build this “nice” structure into the model?

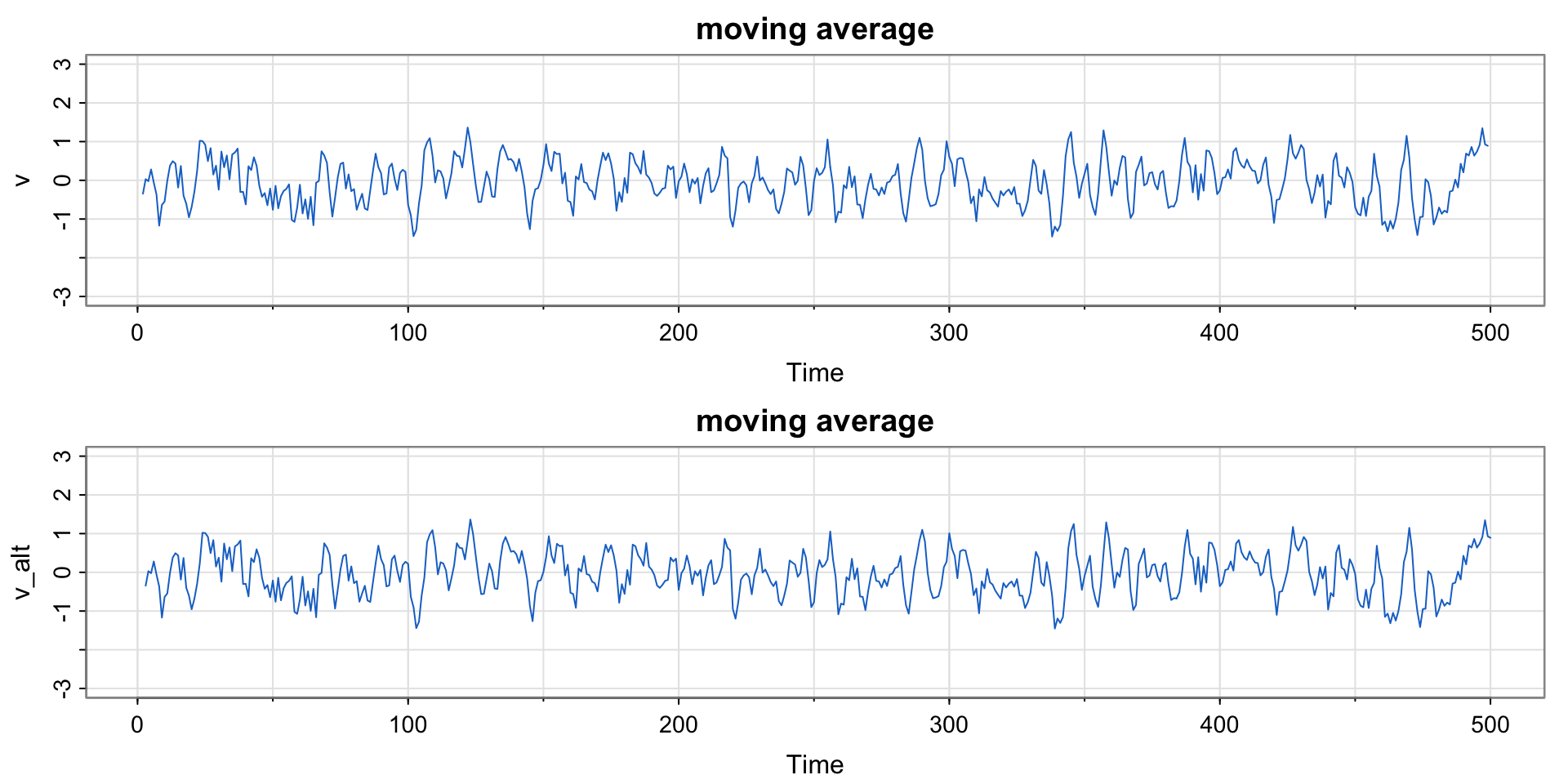

Moving Averages, Smoothing, and Filtering

Replace \(w_t\) with an average of its current value and two previous values:

\[ v_t = \frac{1}{3}(w_{t-2} + w_{t-1} + w_{t}) \]

Why do we divide by 3?

If \(w_t \sim \text{iid } N(0, \sigma_w^2)\), what is the distribution of \(v_t\)?

Why only the previous two values? Why not one in the past and one in the future?

Plotting a Moving Average

Compare this moving average to the SOI and Recruitment series. How do they differ?

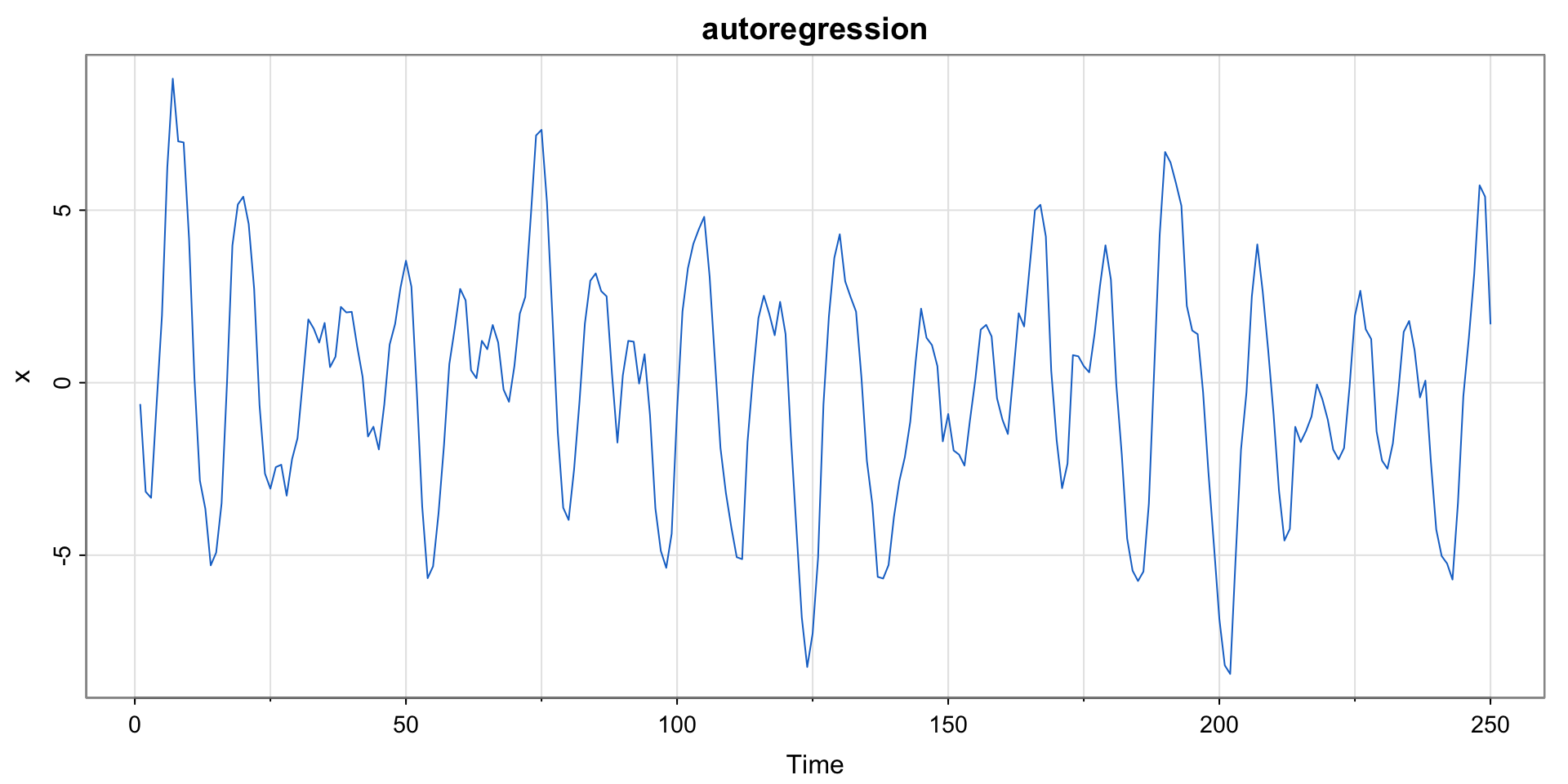

Autoregressions

Starting with white noise \(w_t\), consider the equation:

\[ x_t = 1.5x_{t-1} - 0.75x_{t-2} + w_t \]

a “second-order equation” (why?)

A regression of the current value \(x_t\) of a time series as a function of the past two values of the series

recall (multiple) regression of \(Y\) on \(X = (X_1, X_2)\) is \(Y = \beta_0 + \beta_1X_1 + \beta_2X_2 + \varepsilon\) and compare to autoregression formula above

See (or hear) details in textbook page 11

Plotting Autoregressions

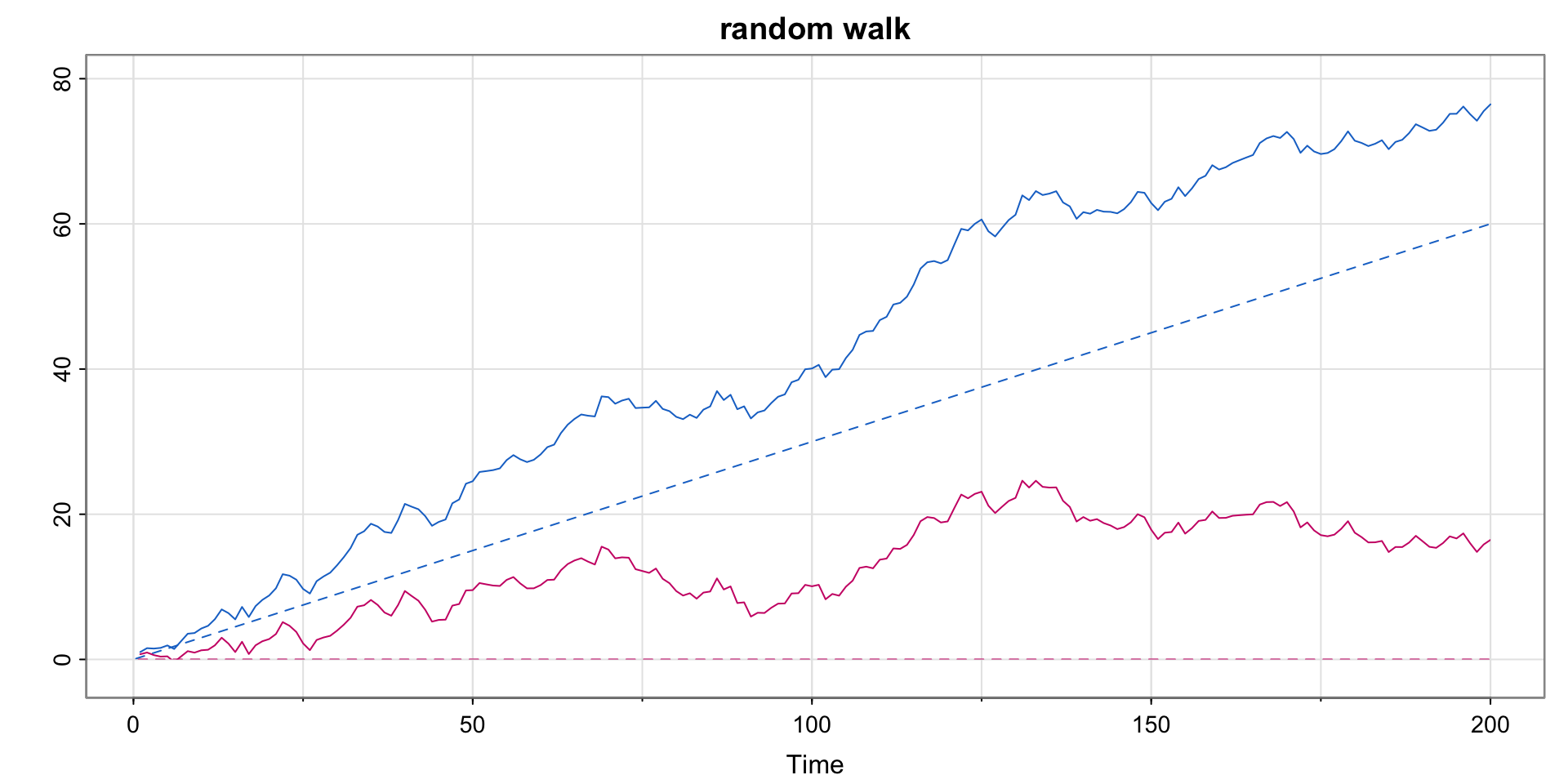

Random Walk with Drift

Again starting with white noise \(w_t \sim wn(0, \sigma^2_2)\), consider the time series

\[ x_t = \delta + x_{t-1} + w_t \]

This is called the “random walk with drift” model.

\(\delta\) is the drift term (\(\delta = 0\) corresponds to “random walk”- no drift)

initial condition \(x_0 = 0\)

Can be rewritten

\[ x_t = \delta t + \sum_{j=1}^t w_j \]

Plotting a Random Walk with Drift

Code

set.seed(314159265) # so you can reproduce the results

w = rnorm(200) ## Gaussian white noise

x = cumsum(w)

wd = w +.3

xd = cumsum(wd)

tsplot(xd, ylim=c(-2,80), main="random walk", ylab="", col=4)

clip(0, 200, 0, 80)

abline(a=0, b=.3, lty=2, col=4) # drift

lines(x, col=6)

clip(0, 200, 0, 80)

abline(h=0, col=6, lty=2)

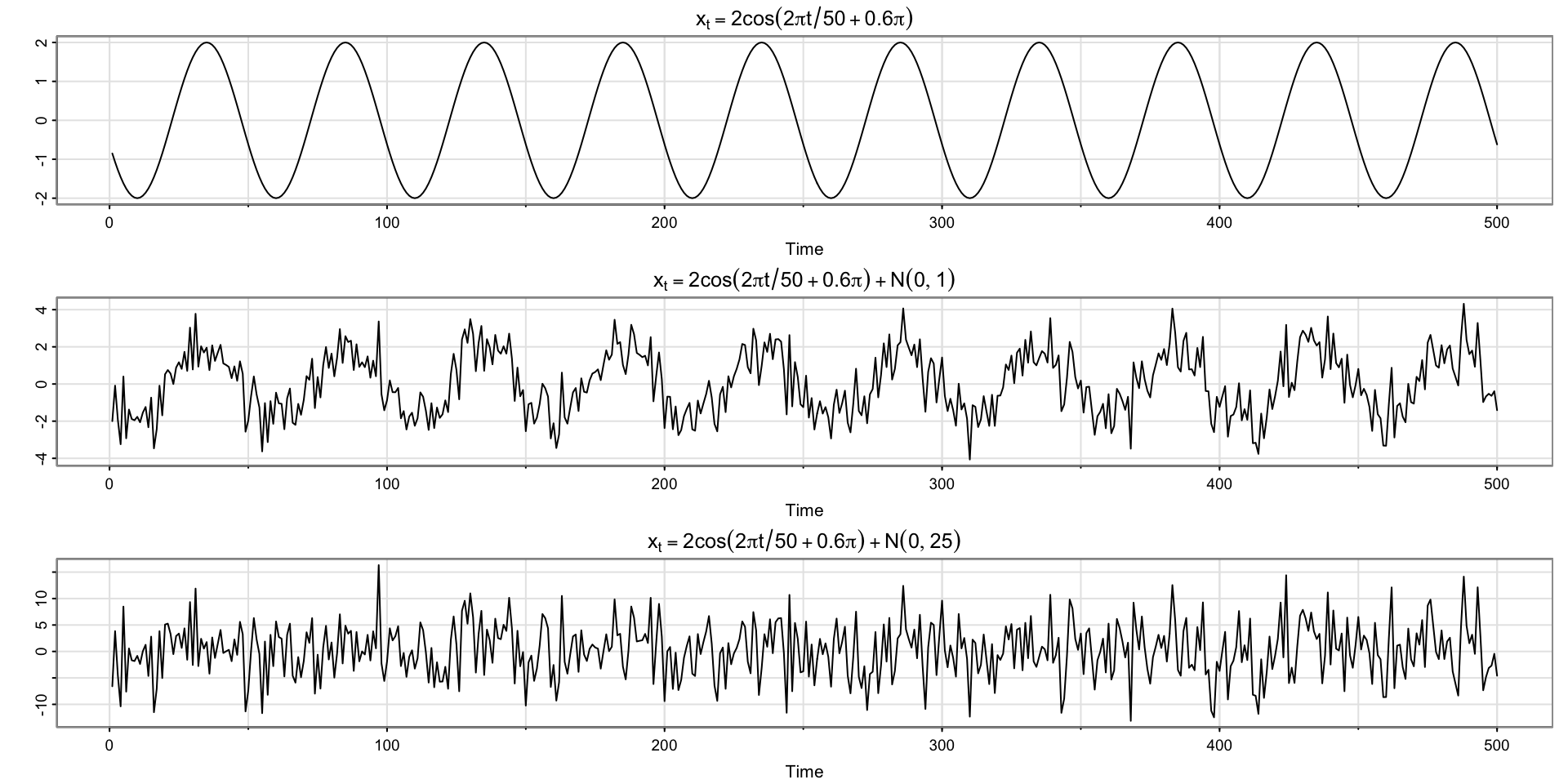

Signal Plus Noise

Consider the model:

\[ x_t = 2\cos(2\pi\frac{t + 15}{50}) + w_t \]

\(2\cos(2\pi\frac{t + 15}{50})\) is the signal

\(w_t\) is the noise

Plotting Signal Plus Noise (two scenarios)

Code

# cs = 2*cos(2*pi*(1:500)/50 + .6*pi) # as in the text

cs = 2*cos(2*pi*(1:500+15)/50) # same thing

w = rnorm(500,0,1)

par(mfrow=c(3,1))

tsplot(cs, ylab="", main = expression(x[t]==2*cos(2*pi*t/50+.6*pi)))

tsplot(cs + w, ylab="", main = expression(x[t]==2*cos(2*pi*t/50+.6*pi)+N(0,1)))

tsplot(cs + 5*w, ylab="", main = expression(x[t]==2*cos(2*pi*t/50+.6*pi)+N(0,25)))

Next Time

Exercises at the end of chapter 1

Start Chapter 2

- Review definition of covariance, correlation, expected value, and variance (good use of AI– prompt then Wikipedia?)