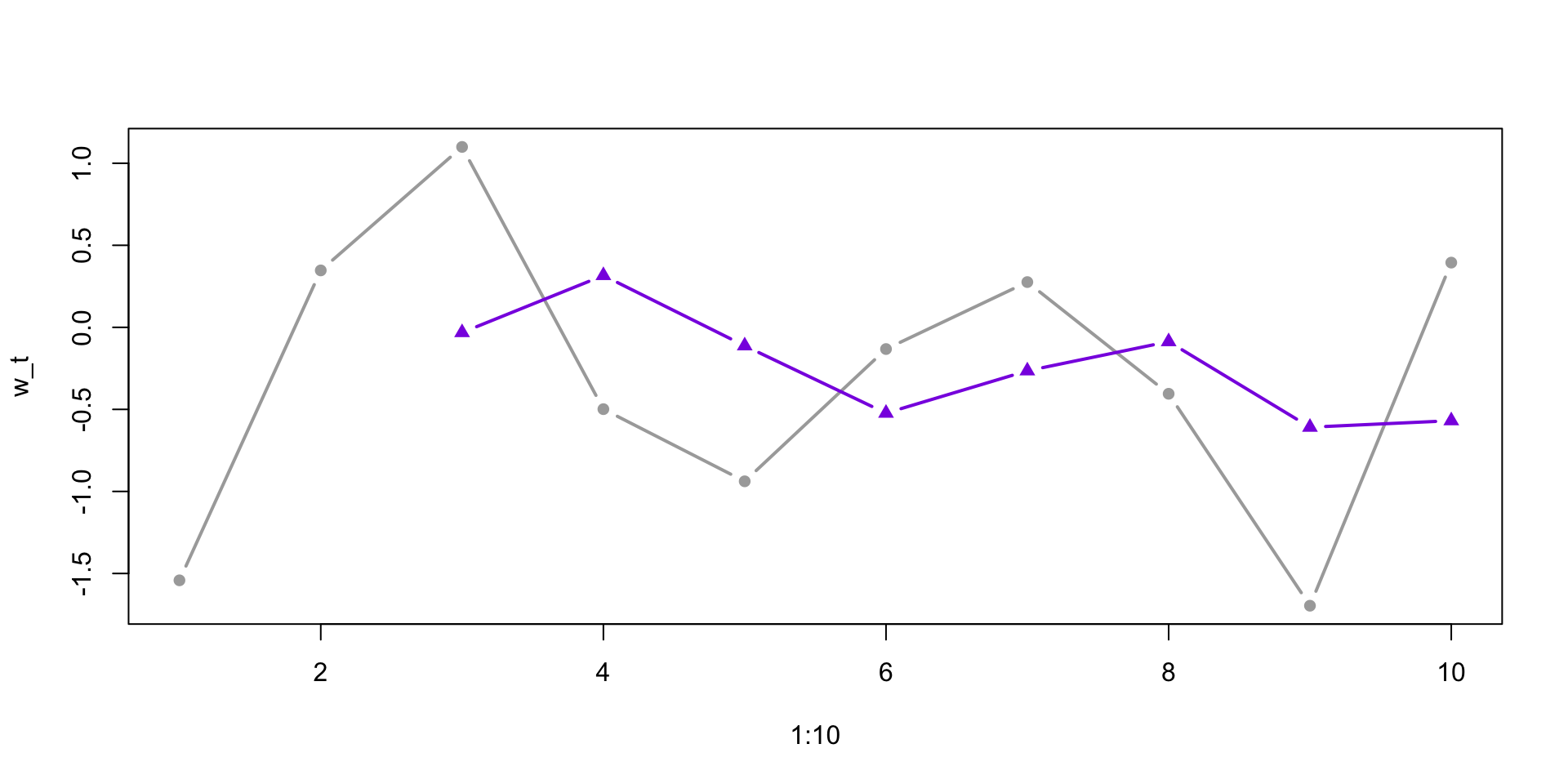

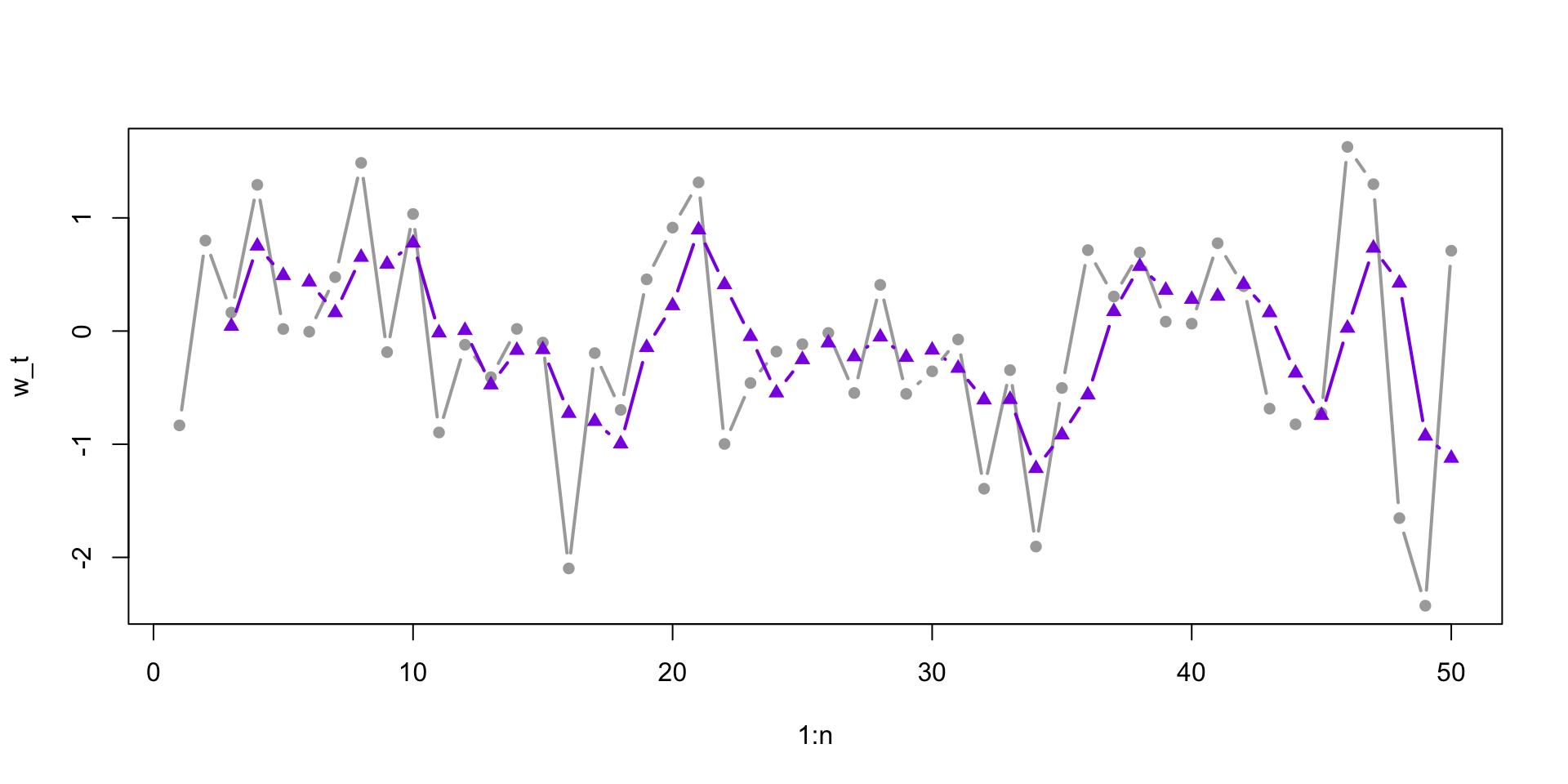

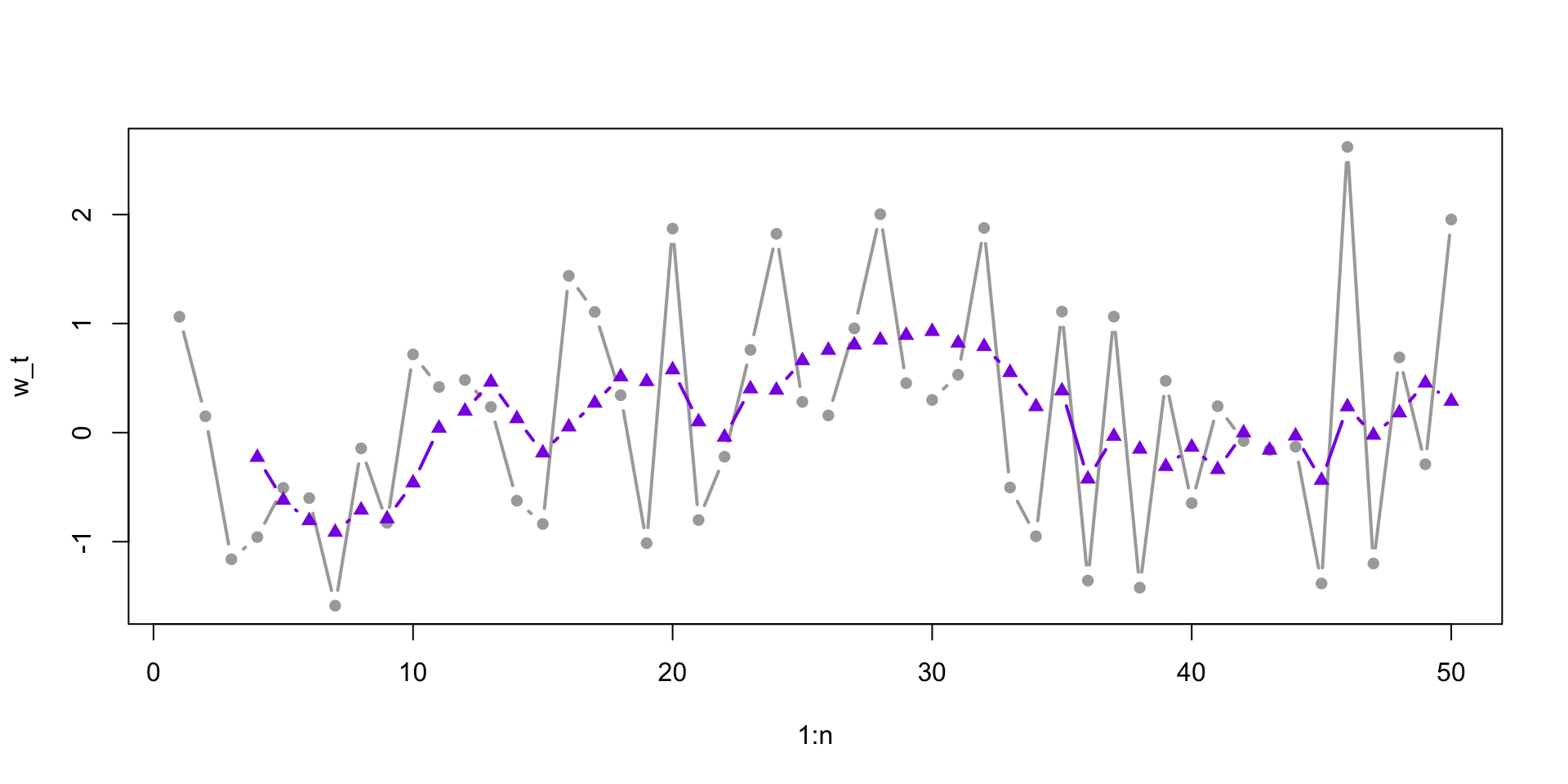

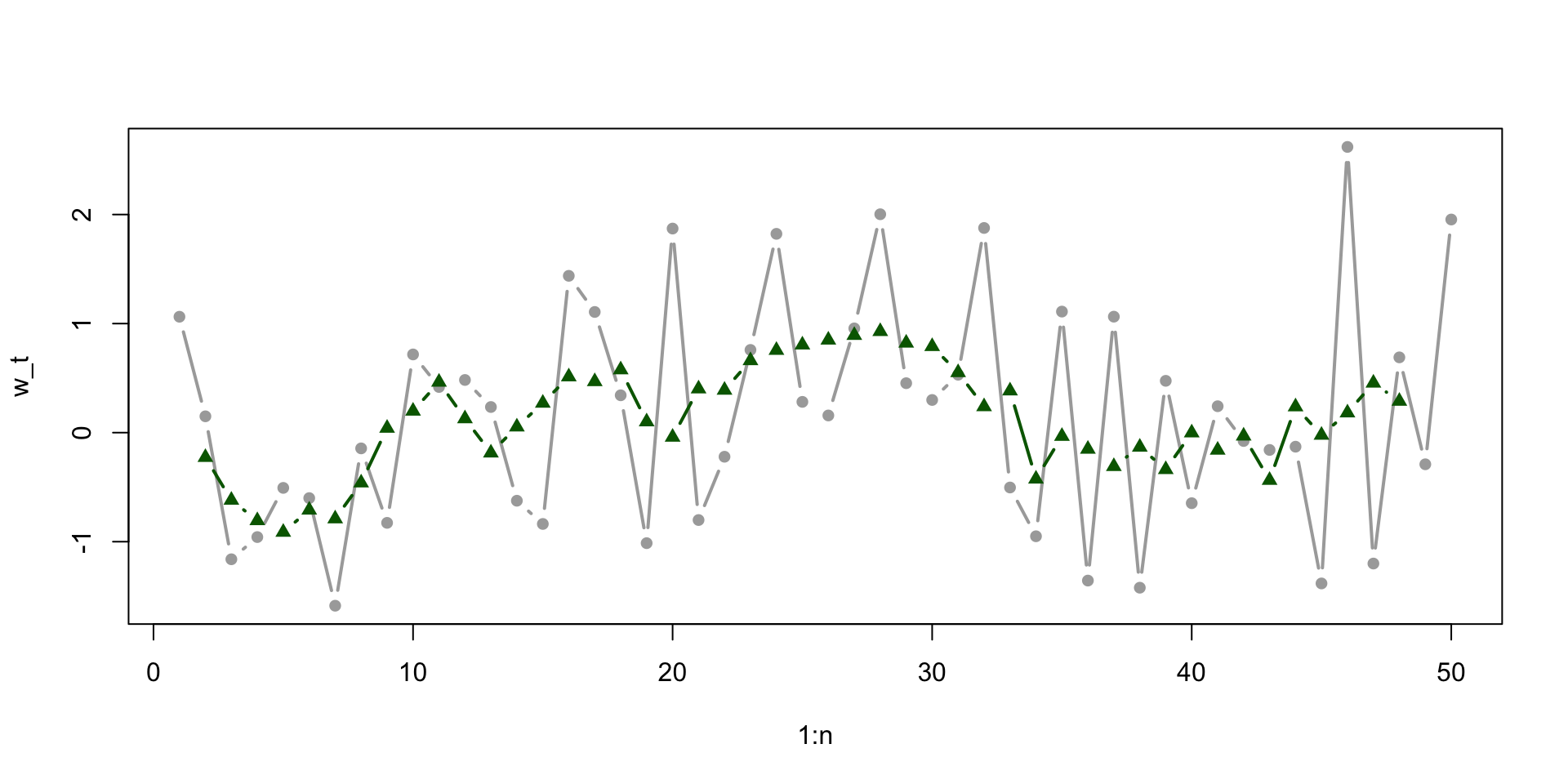

| row | v_t | w_t | w_t1 | w_t2 |
|----:|-------:|-------:|-------:|-------:|
| 1 | NA | -0.834 | NA | NA |
| 2 | NA | 0.799 | -0.834 | NA |
| 3 | 0.043 | 0.163 | 0.799 | -0.834 |
| 4 | 0.752 | 1.292 | 0.163 | 0.799 |
| 5 | 0.491 | 0.018 | 1.292 | 0.163 |
| 6 | 0.435 | -0.006 | 0.018 | 1.292 |
| 7 | 0.163 | 0.476 | -0.006 | 0.018 |
| 8 | 0.652 | 1.486 | 0.476 | -0.006 |
| 9 | 0.592 | -0.186 | 1.486 | 0.476 |
| 10 | 0.778 | 1.034 | -0.186 | 1.486 |
| 11 | -0.016 | -0.896 | 1.034 | -0.186 |
| 12 | 0.006 | -0.121 | -0.896 | 1.034 |
| 13 | -0.475 | -0.408 | -0.121 | -0.896 |
| 14 | -0.170 | 0.019 | -0.408 | -0.121 |
| 15 | -0.164 | -0.102 | 0.019 | -0.408 |
| 16 | -0.727 | -2.098 | -0.102 | 0.019 |
| 17 | -0.798 | -0.195 | -2.098 | -0.102 |
| 18 | -0.996 | -0.697 | -0.195 | -2.098 |
| 19 | -0.145 | 0.457 | -0.697 | -0.195 |
| 20 | 0.225 | 0.914 | 0.457 | -0.697 |
| 21 | 0.895 | 1.314 | 0.914 | 0.457 |
| 22 | 0.410 | -0.998 | 1.314 | 0.914 |
| 23 | -0.048 | -0.459 | -0.998 | 1.314 |
| 24 | -0.546 | -0.181 | -0.459 | -0.998 |
| 25 | -0.252 | -0.116 | -0.181 | -0.459 |
| 26 | -0.105 | -0.017 | -0.116 | -0.181 |
| 27 | -0.227 | -0.547 | -0.017 | -0.116 |
| 28 | -0.052 | 0.408 | -0.547 | -0.017 |
| 29 | -0.231 | -0.555 | 0.408 | -0.547 |
| 30 | -0.168 | -0.356 | -0.555 | 0.408 |
| 31 | -0.328 | -0.074 | -0.356 | -0.555 |
| 32 | -0.608 | -1.393 | -0.074 | -0.356 |
| 33 | -0.604 | -0.345 | -1.393 | -0.074 |
| 34 | -1.214 | -1.904 | -0.345 | -1.393 |
| 35 | -0.917 | -0.503 | -1.904 | -0.345 |
| 36 | -0.564 | 0.715 | -0.503 | -1.904 |
| 37 | 0.173 | 0.306 | 0.715 | -0.503 |
| 38 | 0.572 | 0.694 | 0.306 | 0.715 |
| 39 | 0.361 | 0.083 | 0.694 | 0.306 |
| 40 | 0.281 | 0.065 | 0.083 | 0.694 |
| 41 | 0.308 | 0.776 | 0.065 | 0.083 |
| 42 | 0.413 | 0.397 | 0.776 | 0.065 |
| 43 | 0.162 | -0.686 | 0.397 | 0.776 |
| 44 | -0.371 | -0.824 | -0.686 | 0.397 |
| 45 | -0.745 | -0.725 | -0.824 | -0.686 |
| 46 | 0.026 | 1.627 | -0.725 | -0.824 |
| 47 | 0.733 | 1.298 | 1.627 | -0.725 |
| 48 | 0.424 | -1.653 | 1.298 | 1.627 |
| 49 | -0.927 | -2.427 | -1.653 | 1.298 |
| 50 | -1.123 | 0.710 | -2.427 | -1.653 |
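
The values above appear consistent with `w_t1` and `w_t2` being `w_t` lagged by one and two steps, and with `v_t` being the three-point trailing average $v_t = (w_t + w_{t-1} + w_{t-2})/3$. This reading is inferred from the numbers, not stated in the output itself. The Python sketch below checks it on the first ten rows; the function name `trailing_mean3` is hypothetical, and because the tabulated `w_t` values are already rounded to three decimals, the recomputed averages can differ from the table in the last digit.

```python
# First ten w_t values, copied from the table above (already rounded to 3 decimals).
w = [-0.834, 0.799, 0.163, 1.292, 0.018, -0.006, 0.476, 1.486, -0.186, 1.034]


def trailing_mean3(series):
    """Return the 3-point trailing mean of `series`.

    The first two entries are None because w_{t-1} or w_{t-2} is not
    available there (shown as NA in the table).
    """
    out = []
    for t in range(len(series)):
        if t < 2:
            out.append(None)
        else:
            out.append((series[t] + series[t - 1] + series[t - 2]) / 3)
    return out


v = trailing_mean3(w)

# Print the row number next to the recomputed v_t for comparison with the table.
for row, value in enumerate(v, start=1):
    print(row, "NA" if value is None else f"{value:.3f}")
```

Comparing the printed column against `v_t` for rows 3 to 10 is a quick way to confirm or reject the assumed relationship before relying on it.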